One of the core value propositions of decentralized technologies such as Bitcoin and Ethereum is often stated as censorship resistance. Some have gone so far as to say that it’s the only value proposition that matters, and that it determines whether a decentralized system can be advantageous relative to a centralized one, because otherwise the extra costs and complexity of using such systems wouldn’t be worth it relative to efficient centralized alternatives. With current events unfolding in the world, and with centralized platforms responding to those events by banning users and limiting what may be communicated, many have looked to the set of decentralized technologies collectively known as web3 to enable trustless platforms that make this type of selective censorship impossible. Of course, the chorus of financially motivated stakeholders in these projects sees this as an opportunity for growth, attention, and “web3’s moment.” However, I’d urge the parties involved (the project builders themselves, their communities, their financial backers, and their users) to tread cautiously. This is a responsibility that should be handled with care.

I work on a decentralized video infrastructure project called Livepeer, where our mission is to build the world’s open video infrastructure. In the early days of the project I spent a lot of time talking about the positive benefits of an open video platform, such as enabling developers to build platforms that protect live journalism, freedom of speech, and community-governed content policies. I still very much think these are great ideals to aspire to. But many users, unable to recognize the difference between an infrastructure platform and a consumer application, mistakenly believed that Livepeer was building something that looked like a censorship-resistant YouTube, where individual streamers could show up and begin broadcasting their content.

The dark side of censorship resistance began to expose itself as many of those unhappy with the existing centralized streaming platforms looked for an alternative to use for purposes that resemble the challenges our society is asking large social platforms like Facebook, Twitter, and Twitch to police today: the spread of misinformation, the coordination of violence, and even copyright infringement, which seems quaint by comparison. I spent time with third-party rights protection groups like Thorn.org, which work tirelessly to protect children from abuse that reaches them via the internet. These things aren’t freedom of speech or censorship issues; they are actual legal violations, harmful at a life-altering level, and potential risks to society.

While any large platform that coordinates large groups of people across a wide cross-section of humanity ends up having to deal with these challenges, they are especially acute in the live video streaming world. Content in a live stream is typically consumed within seconds of its creation, which can leave measures like community moderation insufficient to prevent the harm that can come.

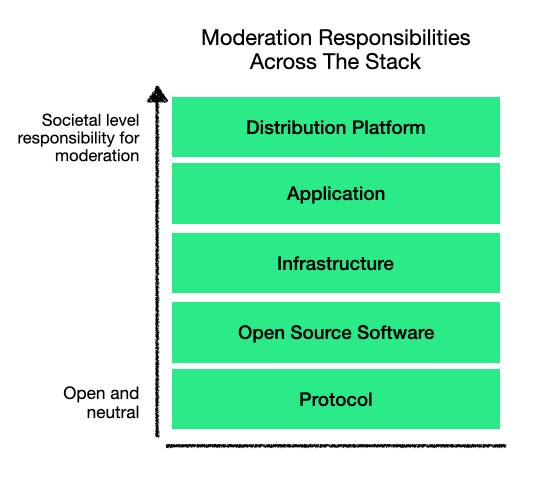

Still, despite awareness of the risks posed by censorship resistance and the harm that can come, do we want to live in a world where all distribution is controlled by a handful of Silicon Valley insiders? A world where, with the snap of a finger, any idea that doesn’t align with a single ideology, or a single country’s laws, can be eliminated from public view? Unlikely. So what is the middle ground that we should strive for? And how does an open video infrastructure like Livepeer view what it is doing, in light of the many challenges and the potential described above? Like any complex and nuanced issue, the answer isn’t straightforward, but my view is that one must look closely at how responsibilities shift across the layers of the stack, and then apply an appropriate level of control and optionality based upon where one is building.

The level of responsibility, control, and moderation that one has for the harmful use that can result starts very low at the bottom of this stack, but must increase as one moves up the stack. While protocol definitions at the bottom should be neutral, open, and un-opinionated, the distribution layers for content at the top bear massive responsibility for limiting the damage that can come from content going from 0 to 2 billion eyeballs in a matter of seconds, as enabled by the power-law scale of social platforms today.

Livepeer builds primarily at the bottom three layers of this stack, so let’s take a bit of a closer look at each layer, with some commentary on how I think about Livepeer’s role and responsibilities at each.

Protocol Layer

At the base layer, protocols should be neutral, open, and un-opinionated. Protocols like TCP/IP, Bitcoin, or HLS are tremendously powerful building blocks that can enable countless upstream use cases, and even though one could connect the dots to both beneficial and harmful applications in the future, the protocol designers bear little to no responsibility for what people choose to do or not do with them at much higher layers. It would be foolhardy and overcomplicated to try to embed controls at a layer responsible for moving packets of data across routers, establishing a shared ledger of account balances for an asset, or reliably delivering live video.

The Livepeer protocol definition specifies a marketplace in which compute resources can advertise their available services and be compensated for work by the users of those services, with a way to ensure that the services were performed correctly. Initially, transcoding and other forms of compute for live video are the primary services. The definition is simple and straightforward; there is no emphasis on controls for censorship or moderation at this layer.

Software Layer

The open source software that implements these protocols bears just slightly more responsibility. Here, we go from protocol definition to implementation, and often to complementary features and enhancements targeted at certain user groups. Because the software is flexible by nature, it is hard to anticipate its use, and responsible builders will provide options and controls that let users make their own decisions about the level of moderation they’d like to apply to content.

In the Livepeer node implementation, you still see generic and open feature sets to enable video streaming broadly. You don’t see features targeted towards users building hate-speech applications, or copyright violation applications. But you do see the beginnings of controls that can ensure that no one running the software will have to participate in hate speech or copyright violations if they choose not to. One example is the ability for the user to limit the software to encoding video only on behalf of known sources of video.
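To make the "known sources" control concrete, here is a minimal sketch of what such an operator-side check could look like. It is not the Livepeer node's actual API or configuration; `SourcePolicy`, `allowed_sources`, and the broadcaster identifiers are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SourcePolicy:
    """Hypothetical operator policy: only encode for sources the operator knows."""

    # Identifiers of video sources the operator has chosen to serve (assumed format).
    allowed_sources: set[str] = field(default_factory=set)
    # When False, the node behaves as fully open and accepts any source.
    restrict_to_known: bool = True

    def accepts(self, source_id: str) -> bool:
        """Return True if the node should take encoding work from this source."""
        if not self.restrict_to_known:
            return True
        return source_id in self.allowed_sources


# Example: an operator who opts in to the restriction.
policy = SourcePolicy(allowed_sources={"broadcaster-a", "broadcaster-b"})
print(policy.accepts("broadcaster-a"))
print(policy.accepts("unknown-broadcaster"))
```

The point of the sketch is that the restriction is opt-in: the default software stays generic, and each operator decides whether to narrow what their own node will work on.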

Infrastructure Layer

Up at the infrastructure layer you have entities running the software, at scale, to be the reliable building blocks for platforms. Here it becomes the infrastructure operators’ responsibility to USE the above controls from the software layer to create policies that align with their ideologies and intentions. Yet still, infrastructure is meant to be open, cost-effective, efficient, and generic for a variety of use cases.

In a decentralized network like Livepeer, the community has the opportunity to think about network-wide assistance that can be given to individual node operators, such as the ability to tap into known third-party moderation lists, to prevent their own resources from being used for purposes that they don’t want to support. Smart video capabilities like scene classification can be used to allow nodes to automatically reject work on certain types of content. Running a Livepeer node does not mean consenting to contribute one’s resources for harmful purposes.
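As a rough illustration of how a node operator might combine a shared moderation list with scene classification, consider the sketch below. Everything in it is an assumption made for the example: the function names, the label names, the thresholds, and especially `stub_classifier`, which stands in for a real scene-classification model and does no actual video analysis.

```python
from typing import Callable, Mapping

# A classifier maps a video segment to per-label confidence scores in [0, 1].
Classifier = Callable[[bytes], Mapping[str, float]]


def should_process(
    source_id: str,
    segment: bytes,
    blocklist: frozenset[str],
    classify: Classifier,
    thresholds: Mapping[str, float],
) -> bool:
    """Decide whether this node is willing to work on a segment."""
    # 1. Refuse sources that appear on a moderation list the operator subscribes to.
    if source_id in blocklist:
        return False
    # 2. Refuse content whose score meets or exceeds the operator's limit for any label.
    scores = classify(segment)
    return all(scores.get(label, 0.0) < limit for label, limit in thresholds.items())


def stub_classifier(segment: bytes) -> Mapping[str, float]:
    """Placeholder for a real model: flags segments that start with b'violent'."""
    return {"violent": 0.9 if segment.startswith(b"violent") else 0.05}


# Hypothetical operator configuration.
third_party_blocklist = frozenset({"known-bad-source"})
operator_thresholds = {"violent": 0.8}

print(should_process("some-source", b"calm footage", third_party_blocklist,
                     stub_classifier, operator_thresholds))
print(should_process("known-bad-source", b"calm footage", third_party_blocklist,
                     stub_classifier, operator_thresholds))
print(should_process("some-source", b"violent footage", third_party_blocklist,
                     stub_classifier, operator_thresholds))
```

Both the list and the thresholds are inputs chosen by the operator, which mirrors the idea that the policy is decided by each node rather than centrally.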

Application Layer

As you push above the infrastructure layer to the application layer, the level of responsibility increases exponentially. Applications serve as the point of creation and access for content. They are built for specific audiences, communities, or content types. They have their own terms of use policies, and corporate or community enforcement of rules. And these rules matter and have real consequences. If an application-level policy is censorship-resistant live video, then prepare for the challenges that come with the dark side.

At Livepeer we stayed below this layer for the first 2.5 years of the project, building only up to infrastructure. And as we did push up to the application layer in the past year, we avoided being a content application, and instead launched a SaaS streaming service for developers to use to build their own apps, which is essentially a gateway to the infrastructure. While the infrastructure, software, and protocol are all open as described above, the application layer is the first where we took a firm stance on moderation and censorship resistance. The centralized livepeer.com API has a terms of use policy which prevents use cases that are illegal, hateful, or inciting violence. That is our decision, but it’s also the layer at which the stack stops being open. Someone else could build an open and decentralized application at this layer that makes different choices, and still leverage the infrastructure, software, and protocol below, though potentially without access to the nodes that choose not to support it.

Distribution Layer

Finally, at the top of the stack you have the distribution layers. Despite not being directly associated with the applications, they profit from the apps, and they bear a societal level of responsibility for their policies on moderation and censorship resistance. App stores and large social platforms virally spread content created by applications, and their attention-economy business models have taken advantage of network effects to reach the scale of society as a whole. They have no choice but to recognize the level of responsibility that they have in making a best effort to ensure that tremendous harm doesn’t come from the content that they are amplifying. It doesn’t matter if Facebook runs on in-house infrastructure, EC2, or Livepeer: they have a higher level of responsibility to make sure that violence-inciting content doesn’t reach two billion users within minutes of its creation. Apple has a responsibility to make sure that applications that are abusive of minors aren’t accessible to two billion people through its app store.

— —

As seen from above, the infrastructure layer appears most grey, and it’s also where the majority of users of Livepeer operate. There are tricky problems here, as I believe an open compute infrastructure enables tremendous good and opportunity for the world. It can be the building block for ownership-economy applications that better align incentives and economic relationships between creators, consumers, and platforms than the attention-economy models that have led us to where we are today on the large social platforms.

But at the same time, the Livepeer community needs to have a high level of awareness and responsibility to ensure that what we build can contribute to positive societal outcomes. As an open network, this is community determined, rather than centrally determined, and you can imagine why the interpretation may be different across ideologies or jurisdictions. As outlined above though, the approach has been to plan and account for the configuration touchpoints, governance mechanisms, and tools to let the network operators decide what they are contributing their compute resources to. On the tool front this includes things like configurable controls for which video sources one is working with, and even nascent machine learning research on scene classification that can identify whether content is violent, explicit, or likely to be copyrighted.

Regardless of the best efforts at the infrastructure or software layer, however, applications and distribution channels will need to account for their own community and content moderation from day one, lest they run into inevitable challenges as they scale and things get messy. While I have talked, and continue to talk, about the benefits of building applications that empower the positive types of free and open communication, I do not believe that a policy of complete censorship resistance for live video with wide distribution leads to a lighter place than the darkness enabled by abuse of such systems. I hope that those building across the spectrum, from protocol through to distribution, join me in taking accountability and responsibility seriously to ensure that we all build things that enable the positive future we want to see exist.